Our machines are getting more intelligent and more capable of independent tasks, and they owe it to the rapidly growing fields of Artificial Intelligence and Machine Learning. However, both areas are incredibly complex and take time and effort to understand better.

This article explores Regression vs. Classification in Machine Learning, including the definitions, types, differences, and use cases.

IBM defines Machine Learning as “…a branch of artificial intelligence (AI) and computer science which focuses on the use of data and algorithms to imitate how humans learn, gradually improving its accuracy.”

Regression and Classification algorithms are both Supervised Learning algorithms: they learn from labeled datasets and are used to make predictions. Where they diverge is in the kind of output they predict.

Now let’s take an in-depth look into Regression vs Classification.

Regression in Machine Learning Explained

Regression finds correlations between dependent and independent variables. Therefore, regression algorithms help predict continuous variables such as house prices, market trends, weather patterns, oil and gas prices (a critical task these days!), etc.

The Regression algorithm’s task is finding the mapping function so we can map the input variable of “x” to the continuous output variable of “y.”
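As a minimal sketch of such a mapping function, the snippet below fits a straight line to a handful of points using ordinary least squares. The data (floor areas and prices) and the helper name `fit_line` are hypothetical and chosen only for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares for a single input variable.

    Returns (slope, intercept) of the line y = slope * x + intercept.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: floor area (x) and house price in thousands (y)
areas = [50, 60, 80, 100]
prices = [150, 180, 240, 300]

slope, intercept = fit_line(areas, prices)

# The learned function maps a new input x to a continuous output y
predicted_price = slope * 70 + intercept
print(round(predicted_price, 2))
```

The output is a number on a continuous scale rather than a category, which is what makes this a regression problem.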

Classification in Machine Learning Explained

Classification, on the other hand, finds functions that help divide the dataset into classes based on various parameters. A Classification algorithm is trained on a training dataset and then sorts new data into categories based on what it learned.

Classification algorithms find the mapping function that maps the input “x” to a discrete output “y.” The algorithms estimate discrete values (for example, binary values such as 0 and 1, yes and no, or true and false) based on a particular set of independent variables. Some classifiers, such as logistic regression, do this by predicting the probability that an event occurs, fitting the data to a logit function.
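One way to picture the step from a probability to a discrete label is the logistic (sigmoid) function used in logistic regression. The sketch below assumes a single input variable and uses hand-picked, hypothetical values for `weight` and `bias`; in practice these would be learned from training data.

```python
import math


def sigmoid(z):
    """Logistic function: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_class(x, weight, bias, threshold=0.5):
    """Return (probability, label) for a single input value x."""
    probability = sigmoid(weight * x + bias)
    # The discrete output: 1 if the probability reaches the threshold, else 0
    label = 1 if probability >= threshold else 0
    return probability, label


# Hypothetical parameters, not learned from any real dataset
prob_high, label_high = predict_class(3.0, weight=1.0, bias=-2.0)
prob_low, label_low = predict_class(1.0, weight=1.0, bias=-2.0)
print(label_high, label_low)
```

The probability itself is continuous, but the value the classifier reports is one of a fixed set of classes.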

Classification algorithms are used for tasks like detecting spam email, predicting whether bank customers will repay their loans, and identifying cancerous tumor cells.

Types of Regression

Here are the types of Regression algorithms commonly found in the Machine Learning field:

- Decision Tree Regression: This type of regression divides the dataset into smaller and smaller subsets. Each subset is then used to predict the value of any data point that falls into it.

- Principal Components Regression: This regression technique is widely used when there are many independent variables or when multicollinearity exists in your data.

- Polynomial Regression: This type fits a non-linear equation by using the polynomial functions of an independent variable.

- Random Forest Regression: Random Forest regression is heavily used in Machine Learning. It uses multiple decision trees to predict the output. Each tree is built from data points chosen at random from the given dataset, and the trees’ predictions are combined to produce the final output.

- Simple Linear Regression: This type is the least complicated form of regression. It models the relationship between a single independent variable and a continuous dependent variable as a straight line.

- Support Vector Regression: This regression type solves both linear and non-linear models. It uses non-linear kernel functions, like polynomials, to find an optimal solution for non-linear models.

Types of Classification

And here are the types of Classification algorithms typically used in Machine Learning:

- Decision Tree Classification: This type divides a dataset into segments based on particular feature variables. For numerical features, each division uses a threshold value chosen so that the resulting segments are as pure as possible.

- K-Nearest Neighbors: This Classification type identifies the K nearest neighbors to a given observation point. It then uses those K points to evaluate the proportion of each class of the target variable and predicts the class with the highest proportion.

- Logistic Regression: This classification type isn't complex so it can be easily adopted with minimal training. It predicts the probability of Y being associated with the X input variable.

- Naïve Bayes: This classifier is one of the most effective yet simplest algorithms. It’s based on Bayes’ theorem, which describes how event probability is evaluated based on the previous knowledge of conditions that could be related to the event.

- Random Forest Classification: Random forest processes many decision trees, each one predicting a value for target variable probability. You then arrive at the final output by averaging the probabilities.

- Support Vector Machines: This algorithm extends support vector classifiers so that they can handle non-linear decision boundaries. It does this by enlarging the feature variable space using special functions known as kernels.
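To make one of these concrete, here is a minimal sketch of the K-Nearest Neighbors idea from the list above: find the K closest training points and predict the class that appears most often among them. The points, labels, and the function name `knn_predict` are hypothetical, and Euclidean distance is an assumption (other distance measures are possible).

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Predict the label of `query` by majority vote among its k nearest points."""
    # Pair every training point's distance to the query with its label
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    # Keep the labels of the k closest points and pick the most common one
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Hypothetical two-feature dataset with two classes
points = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 6), (6, 7)]
labels = ["ham", "ham", "ham", "spam", "spam", "spam"]

print(knn_predict(points, labels, (2, 2)))
print(knn_predict(points, labels, (6, 5)))
```

Choosing an odd K for a two-class problem avoids tied votes.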

The Difference Between Regression vs. Classification

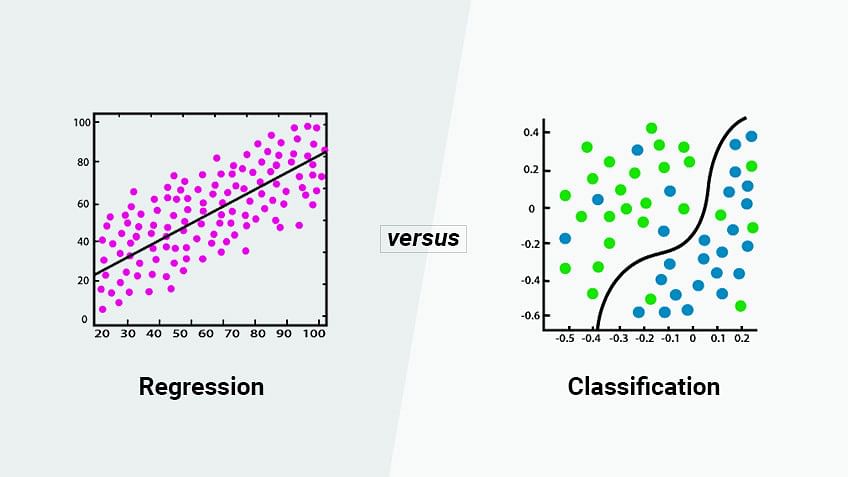

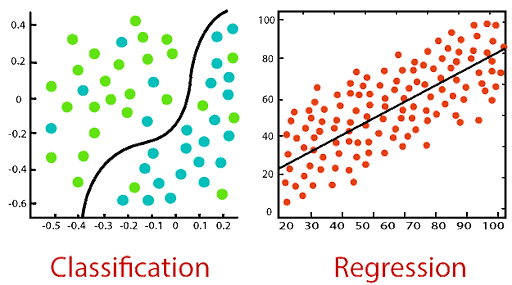

This image, courtesy of Javatpoint, illustrates Classification versus Regression algorithms.

This table shows the specific differences between Regression vs. Classification algorithms.

| Regression Algorithms | Classification Algorithms |
| --- | --- |
| The output variable must be continuous or a real value. | The output variable must be a discrete value. |
| The regression algorithm’s task is to map the input value (x) to the continuous output variable (y). | The classification algorithm’s task is to map the input value (x) to the discrete output variable (y). |
| They are used with continuous data. | They are used with discrete data. |
| Regression attempts to find the best-fit line, which predicts the output more accurately. | Classification tries to find the decision boundary, which divides the dataset into different classes. |
| Regression algorithms solve regression problems such as house price predictions and weather predictions. | Classification algorithms solve classification problems like identifying spam e-mails, spotting cancer cells, and speech recognition. |
| We can further divide Regression algorithms into Linear and Non-linear Regression. | We can further divide Classification algorithms into Binary Classifiers and Multi-class Classifiers. |

Now that we have the differences between Classification and Regression algorithms plainly mapped out, it’s time to see how they relate to decision trees. But before we do that, we need to ask an important question.

What’s a Decision Tree Algorithm, Anyway?

We can classify Machine Learning algorithms into two types: supervised and unsupervised. Decision trees are a supervised Machine Learning algorithm.

A decision tree algorithm can be thought of as a series of nested if-else statements used to predict a result based on the available data.

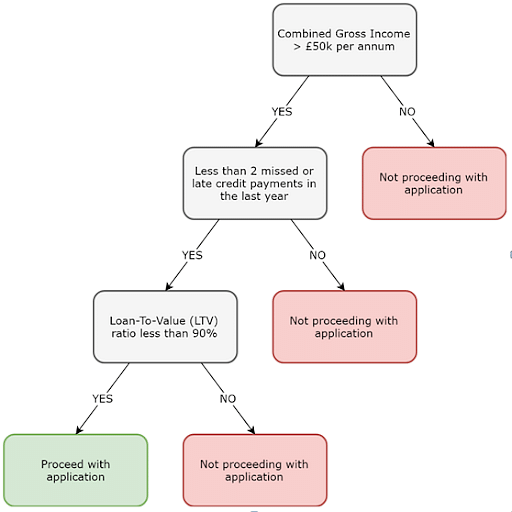

Here’s an example of a decision tree, courtesy of Hackerearth. We can use this decision tree to predict today's weather and see if it's a good idea to have a picnic.
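Written as code, a tree like this is just a set of nested if-else checks. The sketch below is a hypothetical picnic tree: the features (`outlook`, `humidity`, `windy`) and the threshold of 70 are assumptions made for illustration and are not taken from the figure.

```python
def picnic_decision(outlook, humidity, windy):
    """Hypothetical decision tree: each if-else is one node of the tree."""
    if outlook == "sunny":
        # Second-level node: on sunny days, humidity decides
        return "no picnic" if humidity > 70 else "picnic"
    elif outlook == "overcast":
        # Leaf node: overcast days are always fine in this toy tree
        return "picnic"
    else:
        # Rainy branch: wind decides
        return "no picnic" if windy else "picnic"


print(picnic_decision("sunny", humidity=65, windy=False))
print(picnic_decision("rainy", humidity=80, windy=True))
```

A learned decision tree has the same shape; the difference is that the algorithm chooses the questions and thresholds from the training data instead of a person writing them by hand.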

Now that we have the definition of a basic decision tree squared away, we’re ready to delve into Classification and Regression Trees.

What’s the Difference Between a Classification and Regression Tree?

Classification and Regression trees, collectively known as CART, describe decision tree algorithms employed in Classification and Regression learning tasks. Leo Breiman, Jerome Friedman, Richard Olshen, and Charles Stone introduced the Classification and Regression tree methodology in 1984.

A Classification tree is an algorithm whose target variable is fixed or categorical. We can use the algorithm to identify the “class” a target variable is most likely to fall into. These algorithms are used to answer questions or solve problems such as “Who is most likely to sign up for this promotion?” or “Who will or will not pass this course?”

Both of those questions are simple binary classifications. The categorical dependent variable assumes only one of two possible, mutually exclusive values. However, you may have cases where you need a prediction that considers more than two outcomes, such as “Which of these four promotions will people probably sign up for?” In this case, the categorical dependent variable has multiple values.

Here’s a sample Classification tree that a mortgage lender would employ, courtesy of Datasciencecentral.

A Regression tree is an algorithm whose target variable is continuous; the tree predicts that variable’s value. For example, you may want to predict a condominium’s selling price, a continuous dependent variable.

The selling price would depend on continuous factors like square footage and categorical factors like the condo's style, the property's location, and similar factors.

Here’s an example of a Regression tree, courtesy of Rpub. This tree calculates baseball player salaries.

As for the actual differences, Classification trees handle problems where the result is a class, and Regression trees handle problems where the result is a numeric value. But let’s look closer at the differences.

Functionality

Classification trees split the dataset so that each resulting subset is as homogeneous as possible. For instance, suppose we have two variables, age and gender. If the training data showed that 85 percent of men liked a specific movie, the data is split at that point, and gender becomes a top node in the tree. That division makes the information 85 percent pure.
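The “85 percent pure” figure can be computed directly. The sketch below measures the purity of a node in two ways: the share of the majority class, which matches the figure quoted above, and Gini impurity, a measure commonly used by CART-style trees. The label names and helper functions are hypothetical.

```python
from collections import Counter


def majority_share(labels):
    """Fraction of the node that belongs to its most common class."""
    counts = Counter(labels)
    return max(counts.values()) / len(labels)


def gini_impurity(labels):
    """Gini impurity: 0.0 for a perfectly pure node, higher when classes are mixed."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


# Hypothetical node: 100 men, 85 of whom liked the movie
men = ["liked"] * 85 + ["disliked"] * 15

print(majority_share(men))           # share of the majority class
print(round(gini_impurity(men), 3))  # lower means purer
```

When choosing between candidate splits, a tree prefers the one whose child nodes are purest.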

Regression trees fit the target variable using all the independent variables. The data for each independent variable is divided at several candidate points. At each point, the errors between the predicted and actual values are squared and summed to give a Sum of Squared Errors, or SSE. The SSE is compared across all variables and points, the one with the lowest SSE becomes the split point, and the process continues recursively.
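The split search described above can be sketched for a single independent variable as follows. This is a simplified illustration, not a full tree implementation: it assumes each side of a split predicts the mean of its target values, tries the midpoints between neighboring values as candidate thresholds, and uses hypothetical data.

```python
def sse(values):
    """Sum of squared errors around the mean (the prediction for that side)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def best_split(xs, ys):
    """Return (threshold, total_sse) for the split with the lowest combined SSE."""
    pairs = sorted(zip(xs, ys))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold fits between two equal values
        threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
        left = [y for x, y in pairs if x <= threshold]
        right = [y for x, y in pairs if x > threshold]
        total = sse(left) + sse(right)
        if best is None or total < best[1]:
            best = (threshold, total)
    return best


# Hypothetical data: square footage (scaled) vs. selling price (thousands)
sizes = [40, 50, 60, 100, 110, 120]
prices = [100, 110, 105, 300, 310, 305]

threshold, total_sse = best_split(sizes, prices)
print(threshold, total_sse)
```

A full Regression tree would run this search over every independent variable, keep the best split overall, and then repeat the process on each resulting subset.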

Regression vs. Classification: Advantages Over Standard Decision Trees

Both Classification and Regression decision trees generate accurate predictions using if-else conditions. Their benefits include:

- Simple results: It’s easy to observe and classify these results, making them easier to evaluate or explain to other people.

- They’re non-linear and nonparametric: These trees make no implicit assumptions about how the data is distributed or about the form of the relationship between variables, making them well-suited for data mining functions.

- The trees implicitly perform feature selection: Variable screening, also called feature selection, is vital for analytics. The top few nodes of the decision tree are the most important, so these decision trees automatically handle feature selection.

Drawbacks of Classification and Regression Trees

No system is perfect. Classification and Regression decision trees bring their own challenges and limitations.

- They’re prone to overfitting: Overfitting happens when a tree fits the noise found in the training data, which results in inaccurate predictions on new data.

- They’re prone to high variance: Even a small change in the training data can lead to a large change in the resulting predictions, creating an unstable outcome.

- They typically have low bias: Complex decision trees characteristically have low bias, which makes it difficult for the model to generalize to new data.

When to Use Regression vs. Classification

We use Classification trees when the dataset must be divided into classes that belong to the response variable. In most cases, those classes are “Yes” or “No.” Thus, there are just two classes, and they are mutually exclusive. Of course, there sometimes may be more than two classes, but we just use a variant of the classification tree algorithm in those cases.

However, we use Regression trees when we have continuous response variables. For example, if the response variable is something like the value of an object or today’s temperature, we use a Regression tree.

Is a Decision Tree a Regression or a Classification Model?

It can be either, depending on the target variable. In short, a Regression decision tree model is used to predict continuous values, while a Classification decision tree model predicts discrete classes, most often in a binary “either-or” situation.

Do You Want to Become a Machine Learning Professional?

If you’re looking for a career that combines a challenge, job security, and excellent compensation, look no further than the exciting and rapidly growing field of Machine Learning. We see more robots, self-driving cars, and intelligent application bots performing increasingly complex tasks every day.

Simplilearn can help you get into this fantastic field thanks to its AI and Machine Learning Course. This program features 58 hours of applied learning, interactive labs, four hands-on projects, and mentoring. You will receive an in-depth look at Machine Learning topics such as working with real-time data, developing supervised and unsupervised learning algorithms, Regression, Classification, and time series modeling. In addition, you will learn how to use Python to draw predictions from data.

Simplilearn also offers other Artificial Intelligence and Machine Learning related courses, such as the Caltech Artificial Intelligence Course.

According to Glassdoor, Machine Learning Engineers in the United States can earn a yearly average of $123,764, while in India, a similar position offers an annual average of ₹1,000,000!

Let Simplilearn help you take your place in this amazing new world of Machine Learning and give you the tools to create a better future for your career. So, visit Simplilearn today and take those vital first steps!