Big Data refers to voluminous, often unstructured, and complex data sets that traditional data processing software can't handle. Such software falls short when it comes to capturing, analyzing, curating, sharing, visualizing, securing, and storing big data. Because much of this data is unstructured, forcing it through traditional tools during big data integration leads to errors and clumsy workflows. Big data platforms therefore aim to handle such data more efficiently, and with a smaller margin of error, than relational databases, which are designed for conventional, structured data.

According to a 2019 big data article published in Forbes, over 150 zettabytes (150 trillion gigabytes) of data will need to be processed and analyzed by 2025. Furthermore, 40 percent of companies surveyed report that they frequently need to manage unstructured data. This demand will strain conventional data handling, hence the need for frameworks like Hadoop, which make data analysis and processing more manageable.


What Is Hadoop?

Apache Hadoop is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. Hadoop is made up of four modules:

- Hadoop Common: A set of supporting utilities and libraries used by the other Hadoop modules.

- Hadoop Distributed File System (HDFS™): A distributed, fault-tolerant file system that automatically replicates data across the nodes of a cluster and provides high-throughput access to the stored data.

- Hadoop YARN: The resource-management layer, which allocates cluster resources, schedules jobs, and lets different processing workloads share the same cluster.

- Hadoop MapReduce: IBM defines MapReduce as “the heart of Hadoop.” It is a rigid, batch-oriented programming model for processing large datasets across a cluster of nodes (machines). It processes data in two phases: Map and Reduce. In the Map phase, a mapper function works on small chunks of the data spread across the cluster and emits intermediate key-value pairs. In the Reduce phase, a reducer function aggregates those intermediate results into the final output.
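To make the two phases concrete, here is a minimal, single-process Python sketch of the classic word-count job. It is not Hadoop code: the function names (`mapper`, `shuffle`, `reducer`, `run_job`) are hypothetical, and the shuffle step, which Hadoop performs automatically between the Map and Reduce phases, is simulated with a dictionary. Each inner list stands in for one chunk of input that a separate map task would process on the cluster.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def mapper(line: str) -> Iterator[tuple[str, int]]:
    """Map phase: emit a (word, 1) pair for every word in one input record."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle/sort: group intermediate values by key, as Hadoop does between phases."""
    groups: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reducer(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce phase: aggregate all values that share a key."""
    return key, sum(values)


def run_job(splits: list[list[str]]) -> dict[str, int]:
    """Run map, shuffle, and reduce over input splits (one per simulated map task)."""
    intermediate = [pair for split in splits for line in split for pair in mapper(line)]
    grouped = shuffle(intermediate)
    return dict(reducer(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    # Two splits standing in for two chunks of data handled by separate map tasks.
    splits = [["big data needs big tools"], ["hadoop processes big data"]]
    print(run_job(splits))
    # {'big': 3, 'data': 2, 'hadoop': 1, 'needs': 1, 'processes': 1, 'tools': 1}
```

On a real cluster the mappers run in parallel on different machines and only the grouped intermediate pairs travel over the network to the reducers; this sketch keeps everything in one process so the data flow is easy to follow.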

Benefits of Using Hadoop

The value of timely data processing in business cannot be overstated. Several frameworks serve this purpose, but companies that adopt Hadoop typically do so for the following reasons:

- Scalability: Businesses can process and get value from petabytes of data stored in HDFS.

- Flexibility: Hadoop provides easy access to multiple data sources and data types.

- Speed: Parallel processing and minimal data movement (computation runs on the nodes where the data is stored) allow large amounts of data to be processed quickly.

- Adaptability: Hadoop supports a variety of programming languages, including Python, Java, and C++.
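Python support in Hadoop typically comes through Hadoop Streaming, a utility that ships with Hadoop and lets any executable act as a mapper or reducer by reading lines from standard input and writing tab-separated key/value lines to standard output. The sketch below is a hedged word-count example under that assumption; the script name `wordcount.py` and its `map`/`reduce` arguments are hypothetical conventions chosen for illustration, not part of Hadoop.

```python
import sys
from itertools import groupby
from typing import Iterable, Iterator


def stream_mapper(lines: Iterable[str]) -> Iterator[str]:
    """Emit one 'word<TAB>1' line per word, as a Streaming mapper writes to stdout."""
    for line in lines:
        for word in line.strip().lower().split():
            yield f"{word}\t1"


def stream_reducer(sorted_lines: Iterable[str]) -> Iterator[str]:
    """Sum counts per word.

    Streaming delivers mapper output to the reducer sorted by key, so all lines
    for one word arrive consecutively and groupby can collect them.
    """
    pairs = (line.rstrip("\n").split("\t", 1) for line in sorted_lines if line.strip())
    for word, group in groupby(pairs, key=lambda kv: kv[0]):
        total = sum(int(count) for _, count in group)
        yield f"{word}\t{total}"


def main(argv: list[str]) -> None:
    # Hypothetical CLI convention: "wordcount.py map" or "wordcount.py reduce".
    if len(argv) != 2 or argv[1] not in ("map", "reduce"):
        print("usage: wordcount.py map|reduce", file=sys.stderr)
        return
    step = stream_mapper if argv[1] == "map" else stream_reducer
    for out in step(sys.stdin):
        print(out)


if __name__ == "__main__":
    main(sys.argv)
```

You can try it locally without a cluster: `cat input.txt | python wordcount.py map | sort | python wordcount.py reduce`. Here `sort` stands in for the framework's shuffle, which guarantees that the reducer sees all lines for a given key together; that guarantee is why `groupby` is sufficient. On a cluster, the same script would be passed to the Streaming JAR through its `-mapper` and `-reducer` options; check your distribution's documentation for the exact JAR path.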

A practical example of this is demonstrated in the use case below:

Business Use Case: Sears

By 2013, Hadoop was in use in over half of the Fortune 500 companies, including Sears. The company wanted to analyze customer sentiment data, churn data, and POS transactions to find reliable insights. Implementing Hadoop was a move to “…personalize marketing campaigns, coupons, and offers down to the individual customer,” according to Phil Shelley, then VP and CTO of Sears.

However, the systems Sears was using at the time couldn’t support these objectives due to ETL limitations and shortcomings of the storage grid. The company was receiving a lot of data but could only access 10 percent of it to generate reports. The rest was too much for the storage grid to handle.

After implementing Hadoop, Sears could analyze all of its incoming data and began gaining useful insights from it.

Sears is one of many companies that have revolutionized the way they use data thanks to Hadoop. The framework sets the standard for data storage, processing, and analysis. It is also comparatively inexpensive, since it can run on low-cost commodity hardware or in the cloud.

What Is a Hadoop Developer?

A Hadoop developer is a professional who specializes in software development for Big Data, specifically within the Hadoop ecosystem. Though responsibilities vary with years of experience, a Hadoop developer's duties generally include:

- Writing programs to suit system designs and developing solutions, applications, and APIs to solve business use cases

- Defining workflows

- Implementing solutions to review, mine, and analyze logs or data

- Using cluster services within a Hadoop ecosystem

- Building thorough knowledge of Hadoop Common and the Hadoop ecosystem in general

To be a Hadoop developer, you need skills such as:

- Problem-solving, from a programming perspective

- Architecting and designing

- Documenting

- Workflow designing, scheduling, and usage

- Data loading and all other facets of working with data in varied formats

Skills Needed to Learn Hadoop

Anyone can learn Big Data technologies and frameworks such as Hadoop, as long as they’re committed to it and believe it will improve an aspect of their work or their career prospects. While there are no strict requirements for learning Hadoop, basic knowledge in the following areas will make the material easier to grasp:

Programming Skills

Hadoop calls for knowledge of different programming languages, depending on the role you want to fill. For instance, R or Python is relevant for analysis, while Java is more relevant for development work. That said, it is not uncommon to find beginners with a non-IT background or no programming knowledge learning Hadoop from scratch.

SQL Knowledge

Knowledge of SQL is essential regardless of the role you want to pursue in Big Data. Many companies that relied on an RDBMS are now moving into Big Data or integrating their existing infrastructure with a Big Data platform. Many existing data sets are structured, and even unstructured data can be given structure for processing. Moreover, Big Data platforms built on the Hadoop ecosystem include packages such as Hive and Impala, and Spark components such as Spark SQL, all of which use SQL or SQL-like query languages. Prior experience with SQL lets you pick up these newer tools and process large datasets without worrying about the underlying processing frameworks.
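To illustrate why SQL skills carry over, the sketch below expresses a word count, the kind of aggregation a hand-written MapReduce job would perform, as a single GROUP BY query. It uses Python's built-in `sqlite3` module purely as a local stand-in: Hive, Impala, and Spark SQL are not involved, and the table and function names are hypothetical. The SELECT statement is plain SQL that those engines also accept, but the CREATE TABLE syntax would differ in Hive (for example, STRING instead of TEXT).

```python
import sqlite3


def word_counts_sql(words: list[str]) -> list[tuple[str, int]]:
    """Count words with a GROUP BY query; sqlite3 stands in for a SQL-on-Hadoop engine."""
    conn = sqlite3.connect(":memory:")  # throwaway in-memory database
    try:
        conn.execute("CREATE TABLE words (word TEXT)")
        conn.executemany("INSERT INTO words (word) VALUES (?)", [(w,) for w in words])
        # The aggregation itself: one row per distinct word with its count.
        rows = conn.execute(
            "SELECT word, COUNT(*) AS cnt FROM words GROUP BY word ORDER BY word"
        ).fetchall()
    finally:
        conn.close()
    return rows


if __name__ == "__main__":
    text = "big data needs big tools hadoop processes big data"
    print(word_counts_sql(text.split()))
    # [('big', 3), ('data', 2), ('hadoop', 1), ('needs', 1), ('processes', 1), ('tools', 1)]
```

On a cluster, an engine such as Hive translates a query like this into distributed jobs, which is what lets an analyst work at the SQL level without managing the underlying processing framework.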

Linux

Most Hadoop deployments across industries are Linux-based, so a basic working knowledge of Linux is helpful. That said, Hadoop has supported Windows natively since version 2.2.


Career Benefits of Doing Big Data and Hadoop Certification

Big Data Hadoop certification can propel your career in the right direction, especially if you work in a data-intensive company. Some career benefits include:

1. Hadoop and Big Data Are Relevant for Professionals from Varying Backgrounds

The Hadoop ecosystem consists of tools and infrastructure that can be leveraged by professionals from diverse backgrounds. The growth of big data analytics continues to provide opportunities for professionals with a background in IT and data analysis.

Professions that benefit from this growth include:

- Software developers

- Software architects

- Data warehousing professionals

- Business analysts

- Database administrators

- Hadoop engineers

- Hadoop testers

For example:

- As a programmer, you can write MapReduce code and use Apache Pig for scripting

- As an analyst or data scientist, you can use Hive to perform SQL queries on data

2. Hadoop Is on a High Growth Path

The Big Data landscape has grown over the years, and a notable number of large companies have adopted Hadoop to handle their big data analytics. This is because the Hadoop ecosystem encompasses several technologies necessary for a sound Big Data strategy.

The latest data from Google Trends shows that Hadoop and Big Data have held the same growth pattern over the last few years. This suggests that, for the foreseeable future, Hadoop will hold its importance as a tool for enabling better data-led decisions. As such, to become invaluable to any company (and hence hold elite roles such as Data Scientist, Big Data Engineer, etc.), it’s crucial to learn and grow proficient in all of the technologies encompassed by Hadoop.

Figure 1: Hadoop and big data growing at roughly the same average

3. High Demand, Better Pay

As mentioned above, Hadoop is cost-effective, speedy, scalable, and adaptable. The Hadoop ecosystem, with its suite of technologies and packages such as Hive, Spark, Kafka, and Pig, supports various use cases across industries, which adds to Hadoop’s prominence.

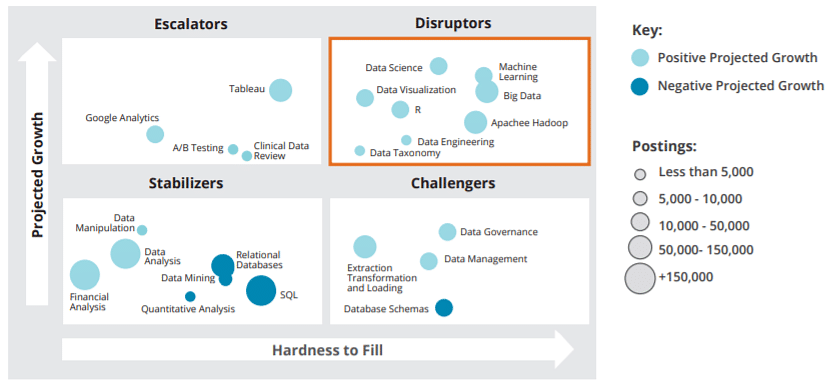

A report by IBM and Burning Glass Technologies lists Apache Hadoop, MapReduce, Apache Hive, and Pig among the most in-demand and highest-paying data science skills. Having these skills can maximize your earning potential, potentially placing you well above the $100,000 salary mark.

Figure 2: Hadoop is one of the highest paying analytical skills

The same report also lists Apache Hadoop as a “disruptor,” meaning that jobs classified under this quadrant “…present the biggest concern; in the future, they are the jobs most likely to suffer from supply shortages, which could prevent firms from utilizing Big Data to its full potential.” Roles requiring these skills also take a long time to fill, which could be attributed to:

- A lack of relevant skills, or

- Failure to find the right combination of skills

Figure 3: Jobs requiring Apache Hadoop will be one of the most difficult to hire for in future

Having Big Data and Hadoop skills is essential, but for employers, the hiring process is more nuanced depending on the industry and role to be filled. Finding the right combination of domain knowledge and data analytics skills usually takes time.

Bottom Line: Your Learning Journey Continues

Given the above, it’s clear that there is real promise in learning Big Data and Hadoop. As long as we keep generating data from all facets of our digital lives, software like Hadoop will have a place in Big Data processing.

A certificate is a good starting point for your Hadoop career, but you will need to put in some work to become an expert. You can do so by following these steps:

1. Keep Practicing

A comprehensive Hadoop course will give you hands-on practice scenarios. Don’t let your knowledge lie dormant as you wait to get a job. Set up a virtual machine after your course and continue practicing with more data sets.


2. Follow Some Established Professionals or Companies

By following professionals in the industry, you will benefit in the following ways:

- Keeping up with current trends happening in your industry

- Getting help with troubleshooting

- Learning about the latest releases and what they mean for your career

3. Pursue an Advanced Course

Once you are proficient in Hadoop, pursue some advanced courses that will propel you to better career opportunities. It’s always advisable to start with a career goal, a certification path for achieving that goal, and key milestones to help you track your progress and keep you focused on your objective. A Big Data and Hadoop course validates your technical skills with Big Data tools and measures your knowledge of Hadoop. You gain hands-on experience working on live projects, learn problem-solving skills using Hadoop, and gain an edge over other job applicants.